Concurrency in Go

Concurrency primitives are built directly into the Go language, which opposed to other high-level languages like C and C++, make them easier to use without pulling in an external library.

Mapping from tasks to hardware is usually not up to the programmer. It’s up to the operating system, and in Go, it’s up to Go’s runtime scheduler. Even on a single CPU core, concurrency still improves performance because one thread of execution can work while another one waits on memory or I/O accesses, effectively hiding this latency.

OS processes have a context unique to the process that contains its virtual address space, its own code, stack, shared libraries, registers (program counter, stack pointer, etc…). Operating systems allow many processes to execute concurrently by enforcing their context. Typically, processes are allowed at most 20ms of CPU time before the OS switches to another process. This gives the user the impression of parallel execution.

OS threads are threads of execution within the same process. They still have a context, but they share file descriptors and virtual memory space, so their footprint is a bit lighter.

Goroutines

Goroutines are user-space threads, and an OS thread may contain many executing goroutines.

Go Runtime Scheduler

The Go runtime scheduler handles many goroutines with a logical processor that is mapped to a main OS thread. The Go runtime scheduler runs on a single OS thread, and the programmer can possibly leverage parallelism by telling the runtime to initialize multiple logical processors.

Concurrency Considerations

- Interleaving

- Race conditions

Synchronizing Goroutines

WaitGroups

An instance of sync.WaitGroup contains an internal counter. You increment this counter with wg.Add(1) for each goroutine to be awaited, and you decrement this counter with wg.Done() when the goroutine completes. The thread calling wg.Wait() will halt execution until the internal counter reaches 0 again.

func main() {

var wg sync.WaitGroup

wg.Add(1)

x := 0

go increment(&wg, &x)

wg.Wait()

fmt.Println(x)

}

func increment(wg *sync.WaitGroup, x *int) {

time.Sleep(100 * time.Millisecond)

*x++

wg.Done()

}

Channels

See Communicating Sequential Processes.

Channels are typed mechanisms to communicate between goroutines. Sending data on a channel is done with channel <- data and you can receive data from a channel with <- channel.

func gorout(x, y int, c chan int) {

c <- x + y

}

func main() {

c := make(chan int)

go gorout(1, 2, c)

go gorout(3, 4, c)

a := <- c

b := <- c

fmt.Println(a + b)

}

Channels are unbuffered by default, meaning they cannot hold any additional data and will block. Blocking means that the sending goroutine blocks until another goroutine receives data, and a receiving goroutine will block until another goroutine sends data. In this way, channels can be used as a synchronization mechanism.

Channels can be buffered, meaning they can have a finite capacity to hold data and unblock goroutines. You can create buffered channels by providing an additional capacity on the make function like make(chan int, 3). With buffered channels, sending only blocks when the buffer is full, and receiving only blocks when the buffer is empty. Using buffered channels can be beneficial if senders and receivers operate at different speeds, however this is only beneficial for periodic differences. If the speed mismatch is too large, you can overwhelm the channel and one of the goroutines will end up blocking.

// Continually blocks for new info until the

// channel is closed with `close(c)`.

for x := range c {

fmt.Println(x)

}

// Somewhere else...

close(c)

Select

for {

select {

case a := <- c1:

fmt.Println(a)

case b := <- c2:

fmt.Println(b)

case c3 <- b:

fmt.Println("Sent to channel 3")

case <-abort:

return

default:

fmt.Println("nop")

}

}

Mutexes

It’s important to keep in mind that even simple operations on any shared memory can get interleaved at the machine code level, causing those one-in-a-billion bugs. Critical paths should always be made synchronous if possible.

var mut sync.Mutex creates a new mutex instance. mut.Lock() attempts to obtain the semaphore, if it’s already held by another thread, execution will block until unlocked. mut.Unlock() is used to free.

Other Synchronization Mechanisms

Initialization steps can either be done before you start up other goroutines, or you can also use the sync.Once utility. After creating an instance with var once sync.Once, you can call once.Do(fn) in multiple goroutines, and the utility will ensure that the fn function only gets called one time, and will block until it’s finished.

Deadlocks

Beware of using synchronization patterns in tandem. If two goroutines use mechanisms that depend on each other, you may get into a deadlock scenario, in which neither thread can continue.

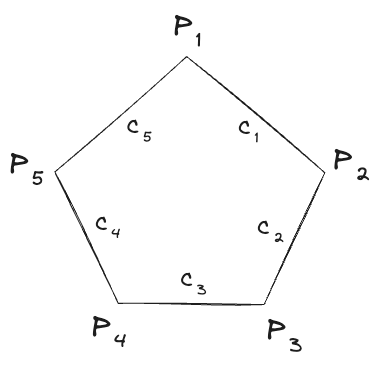

Dining Philosophers Problem

This is a classic thought experiment that illustrates concurrency problems. Five philosophers sit around a circular table, and there is a single chopstick between every pair of philosophers. In order to eat, they must pick up the chopstick to their left and their right, meaning that adjacent philosophers cannot eat at the same time. The problem illustrates ways in which to avoid deadlocks when different threads require shared resources. Depending on the strategy taken to acquire the chopsticks, it’s fairly easy to get into deadlocks (i.e. if all philosophers were to pick up their left chopstick).

Djikstra’s solution: pick the lowest numbered chopstick first. No deadlock, but philosopher 4 might starve.

- Rob Pike - Concurrency is not Parallelism

- Go’s work-stealing scheduler

- Dave Cheney - Performance Without the Event Loop

- epoll

- Google I/O 2013 - Advanced Go Concurrency Patterns

Channel Internals

Channels are goroutine-safe memory structures to store and pass values between goroutines.

hchan Struct

| Member | Description |

|---|---|

buf |

Circular queue |

sendx |

Send index |

recvx |

Receive index |

lock |

Mutex |

sendq |

Waiting senders |

recvq |

Waiting receivers |

Go channels are pointers to underlying hchan structs stored on the heap, which hold a queue of sudog structs that lock over a copy of the memory shared between goroutines.

To block the execution of a channel until it’s free, the operation calls into the runtime scheduler (a method called gopark), which sets the currently running goroutine’s state to waiting. Because the scheduler is implemented in user-space, it’s possible to do this without a true OS context switch.